Navigating the Digital Services Act: an 8-step compliance guide

We built an eight-step guide to help you assess whether you fall under the DSA, identify the obligations that apply to you and prepare where necessary.

By Annemarie Bloemen

Expertise: Data & Digital Services

25.01.2024

From 17 February 2024, many digital service providers will have to deal with a new EU law – the Digital Services Act (DSA). The goal of the DSA is to ensure online safety and fairness.

Not all rules are new – a number of obligations are familiar, such as on notice and takedown of illegal content and the obligation to be clear about restrictions in your terms.

The DSA harmonizes and sets more standards for content moderation efforts and for transparency towards users, authorities and the public. You may for instance have to become transparent about the number of take-down notices you receive. The DSA also provides rules for dispute resolution around content moderation and trader verification and introduces so-called ‘trusted flaggers’ whose notices of illegal or infringing content should be prioritized. Not complying with the DSA may have consequences for the safety and fairness of your business, but can also have legal consequences such as fines or liabilities.

The DSA takes a layered approach: the bigger your online commercial role and the possible societal effects of your online business, the more you need to do. The DSA already applies to, and strongly regulates, the so-called Very Large Online Platforms and Very Large Online Search Engines, such as Google Search, Instagram and TikTok. Because of the risks and consequences of their online practices in the EU, they have been required since August 2023 to set up extensive risk and compliance programs to manage these risks (not part of this Guide). Conversely, under this layered approach, small enterprises are exempted from most of the DSA’s obligations.


Interested in learning more? Register for our breakfast session on February 29th to explore the DSA in practice. Enjoy insightful discussions over coffee and croissants.

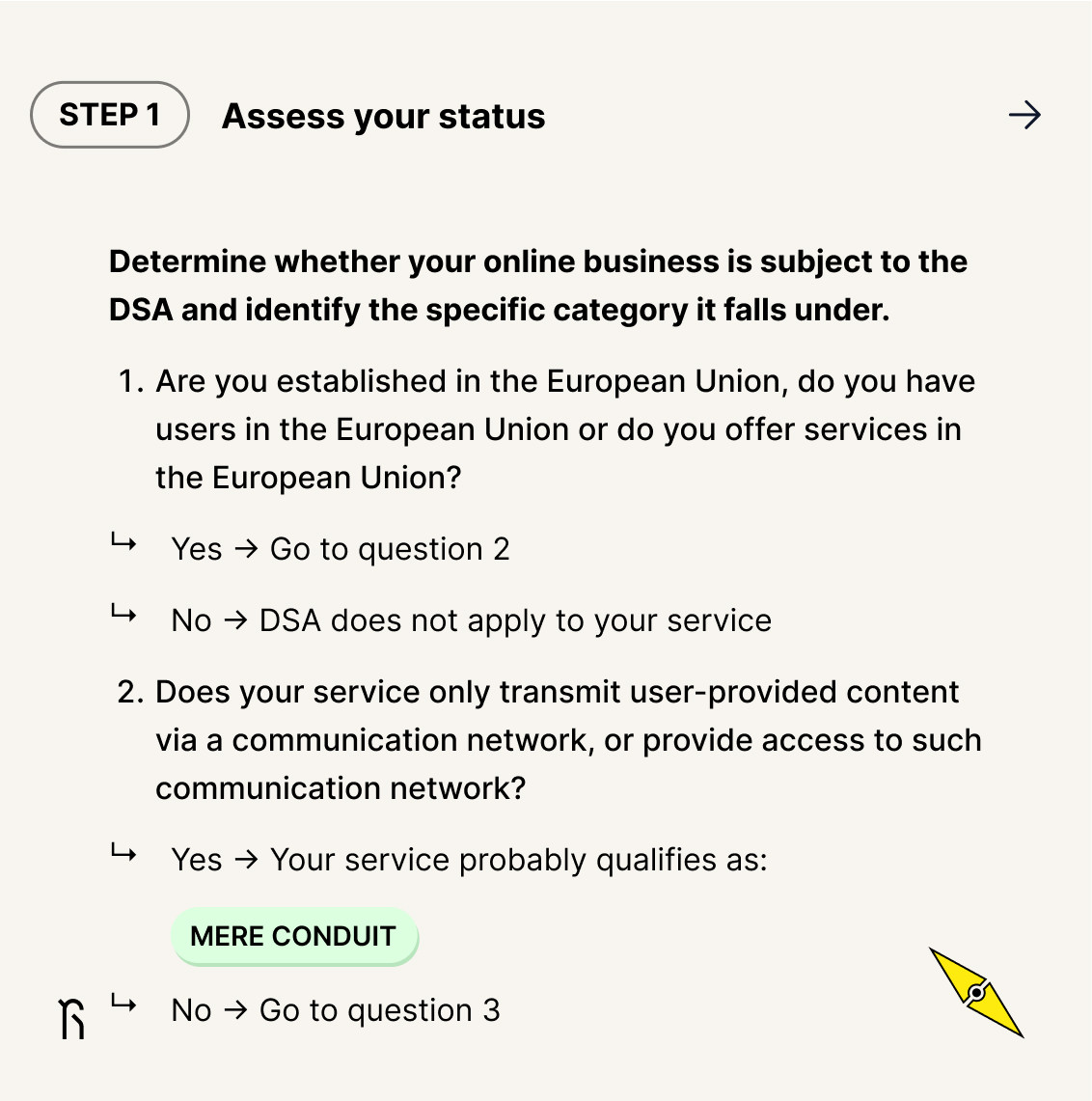

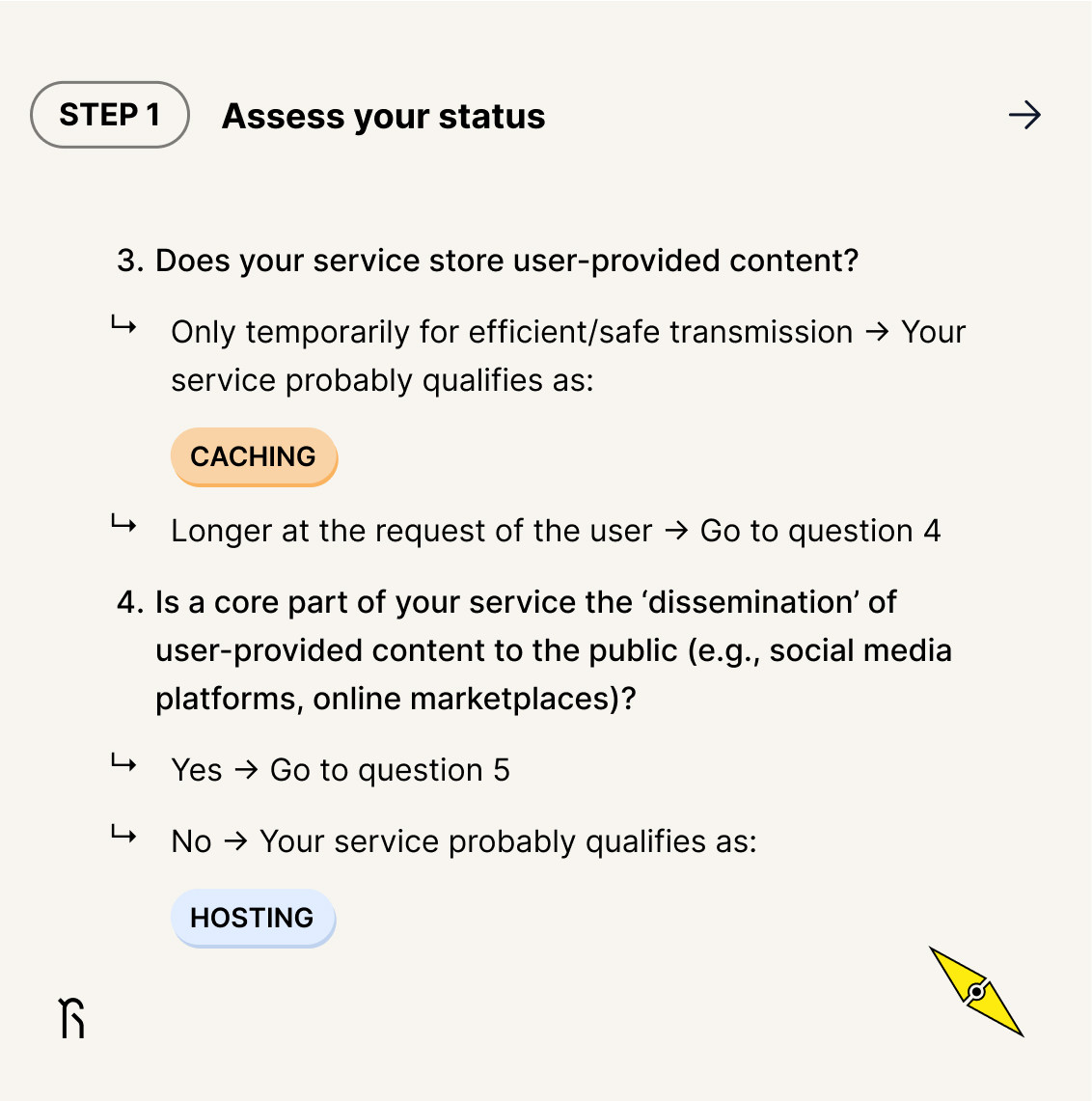

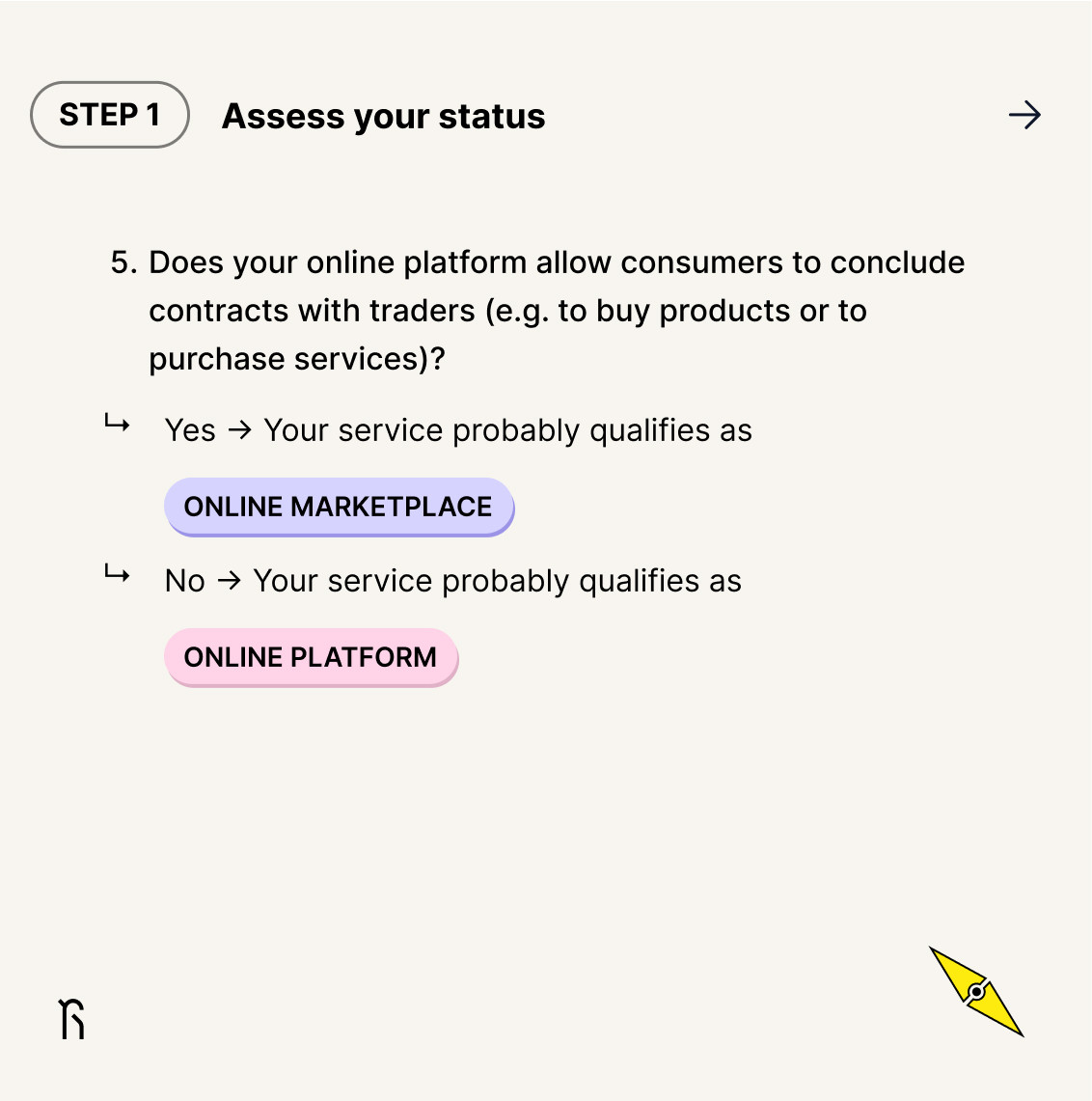

Step 1: Assess your status – do you fall under the DSA, and if so, which category?

You need to decide to which layer/category your online business belongs. Use this checklist to guide you through:

Step 2: Establish vital contact points

You need to establish two key contact points:

- Contact Point for Supervisory Authorities: This involves setting up a clear communication channel between you and regulatory bodies. Key aspects include:

  - Proactive: Publishing easily accessible contact information in an EU language on your platform.

  - Reactive: Promptly responding to orders for the removal of illegal content.

  - Reactive: Providing detailed, timely responses to authorities' information requests about individual service users, ensuring affected users are also notified.

- Contact Point for Users: You should also set up a contact point for service users. This includes a telephone number, email address, electronic contact form, chatbot (clearly identified as such) or instant messaging. Communication with the users should be timely and efficient.

Step 3: Be transparent about your rules on content moderation. Use content moderation fairly

The DSA underscores the importance of transparency in the form of your terms and conditions. Key aspects include:

- Clarity and accessibility – a possible update of your terms: Your terms should be user-friendly and clearly outline service usage and restrictions. This includes rules you will already have – e.g. on acceptable use and the restrictions around posting illegal content or content which infringes the rights of others. For services targeting children, language should be age-appropriate.

- Content moderation and complaints handling – a possible update of your terms: Your terms should include detailed rules and processes for content moderation and handling internal complaints. Content moderation and complaints handling should happen in an objective, proportional, and diligent manner, considering the interests of all parties involved, specifically their fundamental rights.

- Suspension – a possible update of your terms and an internal process for recognizing and suspending frequent violators or misusers: You must outline in your terms the circumstances for suspending users who regularly post obviously illegal content or misuse your complaint system by frequently providing unfounded notices (explained below). In short, you need to provide a warning first, and base your decision to suspend on all pertinent factors, including the absolute and relative frequency of abuses, the severity of the abuses, and, if known, the user's intent.

- Recommender system disclosure – a possible update of your terms: Also describe the main parameters of your service's recommender systems in your terms, including how users can influence these parameters, focusing on the most significant criteria and their relative weight.
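The suspension factors listed above could be recorded per user so that a reviewer sees them side by side. The following is only a minimal sketch: the names `AbuseRecord` and `suspension_factors`, the severity scale and the `min_absolute` threshold are hypothetical and are not prescribed by the DSA. It does not take the suspension decision itself; it only checks the "warning first" precondition and summarizes the factors for a human reviewer.

```python
from dataclasses import dataclass


@dataclass
class AbuseRecord:
    """Hypothetical summary of one user's history over a review period."""
    total_items: int        # items posted (or notices filed) in the period
    abusive_items: int      # of which obviously illegal or unfounded
    max_severity: int       # illustrative scale: 1 (minor) to 5 (grave)
    intent_known: bool      # True only if the user's intent is actually known
    warning_issued: bool    # a prior warning is a precondition for suspension


def suspension_factors(record: AbuseRecord, min_absolute: int = 3) -> dict:
    """Summarize the factors a reviewer should weigh (threshold is an assumption)."""
    if record.total_items > 0:
        relative = record.abusive_items / record.total_items
    else:
        relative = 0.0
    return {
        "absolute_frequency": record.abusive_items,
        "relative_frequency": relative,
        "max_severity": record.max_severity,
        "intent_known": record.intent_known,
        # No review for suspension without a prior warning and repeated abuse.
        "eligible_for_review": record.warning_issued
        and record.abusive_items >= min_absolute,
    }
```

Keeping the final decision with a person, rather than returning "suspend" or "do not suspend", leaves room to weigh all pertinent circumstances of the individual case.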

Step 4: Implement or update your notice and action procedures

The DSA mandates the creation of a user-friendly and digital system for people to report illegal content, with several key requirements:

- Confirm receipt – a possible update of your notice-and-takedown/action process: You should confirm receipt of reports when contact details are available and inform users of decisions taken. Additionally, you must inform the notifier if the decision was made through automated means.

- Decisions – this should not be new: Decisions on reports should be made in a non-arbitrary, objective manner within a reasonable timeframe.

- Reasons for action – a possible update of your notice-and-takedown/action process: When addressing illegal content or terms of service violations, you should provide clear, specific reasons for actions taken, as much as possible given the situation. This does not apply if user contact information is unavailable or in cases involving large amounts of misleading commercial content.

- Report serious criminal offenses – this should not be new: You should also report suspected criminal offenses threatening life or safety to relevant authorities in a member state, or to Europol if the appropriate member state is unclear.
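The receipt and decision messages described above can be sketched as two small functions. This is an illustration under assumptions, not a reference implementation: the `Notice` and `Decision` classes, their fields and the message wording are all hypothetical. The sketch shows the two conditions from the list: nothing is sent when no contact details are available, and the notifier is told when automated means were used.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Notice:
    notice_id: str
    content_url: str
    reason: str
    notifier_email: Optional[str] = None  # absent for anonymous notices


@dataclass
class Decision:
    action: str                 # e.g. "removed", "restricted", "no_action"
    statement_of_reasons: str   # clear, specific reasons for the action
    automated: bool             # True if taken through automated means


def receipt_message(notice: Notice) -> Optional[str]:
    """Return a confirmation of receipt, or None if no contact details exist."""
    if not notice.notifier_email:
        return None
    return f"We received your notice {notice.notice_id} about {notice.content_url}."


def decision_message(notice: Notice, decision: Decision) -> Optional[str]:
    """Return the decision notification, or None if no contact details exist."""
    if not notice.notifier_email:
        return None
    lines = [
        f"Decision on notice {notice.notice_id}: {decision.action}.",
        f"Reasons: {decision.statement_of_reasons}",
    ]
    if decision.automated:
        lines.append("This decision was made using automated means.")
    return "\n".join(lines)
```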

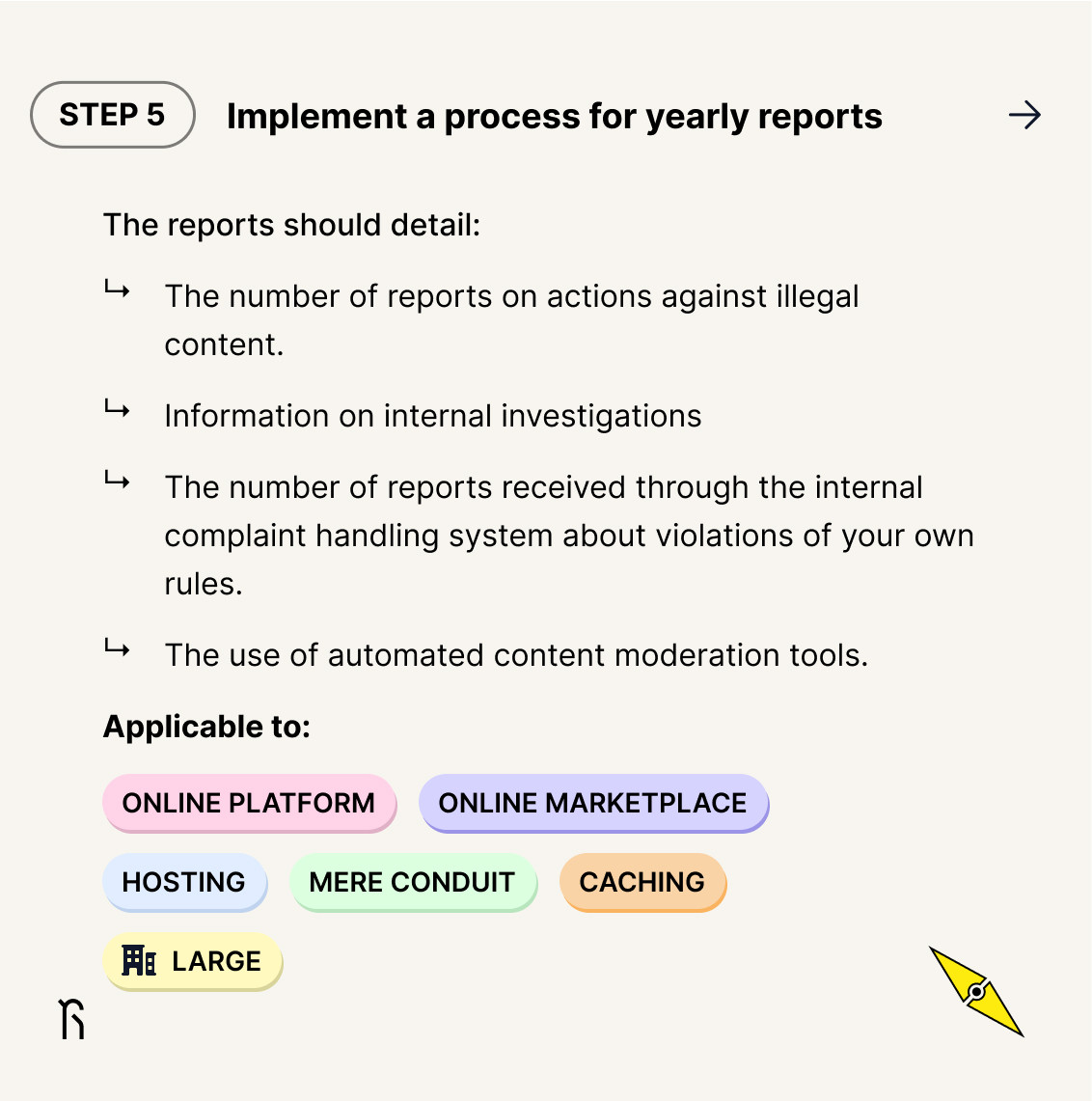

Step 5: Implement a process for yearly reports

Yearly transparency – start reporting on aggregated notice-and-action information. The DSA requires an annual, clear and machine-readable report on content moderation, detailing:

- The number of reports on actions against illegal content, including complaint reasons, decisions, median response time, and reversed decisions.

- Information on internal investigations, including the number initiated, training for content moderators, and decisions affecting content availability, visibility, and accessibility, broken down by content type, rule violations, detection methods, and restrictions applied.

- The number of reports received through the internal complaint handling system about violations of your own rules.

- The use of automated content moderation tools, with details on deployment, indicators, and accuracy.

- The number of notice-and-action requests, categorized by content type, origin (including Trusted Flaggers, designated entities to flag illegal content), actions taken, legal or terms-based decisions, automated responses, and median response time.

- The number of reports received through the internal complaint handling system, including the reasons for the complaints, the decisions made, the median response time, the number of decisions subsequently reversed.

- The number of suspensions of users who frequently post obviously illegal content or abuse the complaint-handling procedure.

- The number of external dispute resolution procedures, the outcome of those procedures as well as the median time needed to complete the procedures.
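Several of the figures above are counts per category plus a median response time, which can be produced from the underlying notice records. The sketch below is one possible way to aggregate them into a machine-readable (JSON) structure. The function name `build_transparency_report`, the record keys and the output field names are assumptions made for illustration; they are not an official reporting template.

```python
import json
from collections import Counter
from statistics import median


def build_transparency_report(year, notices):
    """Aggregate notice-and-action records into a JSON-serializable dict.

    Each notice is a dict with the (hypothetical) keys 'content_type',
    'source', 'action', 'automated' and 'response_hours'.
    """
    response_times = [n["response_hours"] for n in notices]
    return {
        "year": year,
        "notices_total": len(notices),
        "by_content_type": dict(Counter(n["content_type"] for n in notices)),
        "by_source": dict(Counter(n["source"] for n in notices)),
        "by_action": dict(Counter(n["action"] for n in notices)),
        "automated_decisions": sum(1 for n in notices if n["automated"]),
        # The median is undefined without data, so report null in that case.
        "median_response_hours": median(response_times) if response_times else None,
    }


# Made-up sample records, for illustration only.
sample = [
    {"content_type": "counterfeit", "source": "user", "action": "removed",
     "automated": False, "response_hours": 10},
    {"content_type": "counterfeit", "source": "trusted_flagger", "action": "removed",
     "automated": True, "response_hours": 2},
    {"content_type": "hate_speech", "source": "user", "action": "no_action",
     "automated": False, "response_hours": 4},
]
report = build_transparency_report(2024, sample)
print(json.dumps(report, indent=2))
```

The same pattern would apply to complaints and dispute resolution procedures, provided each record keeps its timestamps and outcome from the start. That is one reason to set up record keeping before the first reporting period rather than after it.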

Step 6: Implement or update your internal complaint-handling system

Start or update your internal complaint-handling system. The DSA requires a comprehensive complaints system to allow people who (i) have filed a notice or (ii) are affected through content moderation to file a complaint against certain decisions. Furthermore, complaints from experts (the so-called 'Trusted Flaggers') should be given priority. Your complaint-handling procedure must have the following features:

Complaint submission and handling:

People should be able to lodge complaints within six months of your decision.

The system must be easy to locate, user-friendly, and enable submission of precise and well-supported complaints.
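Whether a complaint arrives within the six-month window is a simple date check, sketched below. The helper names `add_months` and `complaint_in_time` are hypothetical. The sketch also makes two assumptions that you should verify for your own process: that the period is counted from the date passed in as `decision_date`, and that a complaint filed on the last day still counts.

```python
import calendar
from datetime import date


def add_months(d: date, months: int) -> date:
    """Return the date `months` later, clamped to the end of shorter months."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def complaint_in_time(decision_date: date, complaint_date: date,
                      window_months: int = 6) -> bool:
    """True if the complaint falls inside the window (end date inclusive)."""
    deadline = add_months(decision_date, window_months)
    return decision_date <= complaint_date <= deadline
```

For example, under these assumptions a decision dated 17 February 2024 could be challenged up to and including 17 August 2024.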

Decision making process:

Decisions on complaints should be made carefully, objectively, and without arbitrary judgment within a reasonable timeframe.

If a complaint provides sufficient evidence showing the initial decision was incorrect, you must reverse this decision promptly.

Clearly communicate the reasons for each decision to the complainant and include information about dispute resolution and other appeal processes.

Decisions must not be solely automated and should be under the responsibility of qualified employees.

Dispute resolution:

Users should be informed of their right to choose any certified out-of-court dispute resolution body for settling disputes that arise from the handling of a complaint. Ensure that information about these dispute resolution options is clear, user-friendly, and easily accessible.

Both the platform and complainant are expected to cooperate in good faith in the dispute resolution process. You may refuse to participate in dispute resolution if the complaint is based on the same information and grounds of illegality that have already been addressed.

Priority handling and monitoring of Trusted Flaggers:

You should implement technical and organizational measures to prioritize and handle complaints from Trusted Flaggers swiftly.

If a Trusted Flagger consistently submits imprecise, erroneous, or unsubstantiated complaints, you should inform the Digital Services Coordinator.

Step 7: Adopt or update your advertising interface

The DSA sets forth requirements to foster user-centric and ethical digital service design, particularly in interface design and advertising:

No manipulative interface design – possible update of your user interface: Service interfaces must not impair users' freedom to make informed decisions (this is the prohibition of so-called dark patterns).

Transparent targeted advertising – implement real-time transparency: Advertisements must be clearly identified as such, and be accompanied by real-time information about the advertiser, funding sources, and primary targeting criteria (‘why do I see this ad’). Users should also be able to modify these targeting criteria.

User role in content classification – possible update of your user interface: Parties posting content need to be provided with means to make clear if their content is promotional or commercial.

Profiling restrictions in advertising – this should not be new: Advertisements should not be based on profiling that involves special categories of personal data as per the GDPR (such as health, race, sexual life or political opinion).

Recommender system options – transparency on personalized feeds and search results: If your platform or marketplace includes a recommender system, you must explain the main parameters used in determining the order of the recommender system and give users the option to modify these parameters.

Increased child protection – in any case – stop showing profiled ads to children: Services should ensure enhanced privacy and safety for children, including limits on profiling-based ads targeting minors.

Data publication – regular reporting on average number of active users: Every six months, you must publish data on your service's interface about the average number of active recipients per month of the service.
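The six-monthly figure in the last item is an average over monthly counts. A minimal sketch of that arithmetic follows, assuming you already have one active-recipient count per month. How an "active recipient" is counted is a separate question this sketch does not answer, and the function name is hypothetical.

```python
from typing import Sequence


def average_monthly_active_recipients(monthly_counts: Sequence[int]) -> float:
    """Average of exactly six monthly active-recipient counts."""
    if len(monthly_counts) != 6:
        raise ValueError("expected exactly six monthly counts")
    if any(count < 0 for count in monthly_counts):
        raise ValueError("counts cannot be negative")
    return sum(monthly_counts) / len(monthly_counts)
```

Rejecting input that does not cover exactly six months avoids silently publishing an average over an incomplete period.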

Step 8: Adopt or update your marketplace way-of-working and standards

This is what you need to prepare for:

Verifying identity – update your onboarding process and user interface: You should only allow traders on the platform after verifying their identity. The name, address, telephone number, email address, Chamber of Commerce number (if applicable), and self-certification of the merchant should also be published on your platform. Note – for existing traders, you have 12 months to comply with this verification obligation. Traders who have not identified themselves after that date should be suspended from the marketplace.

Inaccurate information – implement a process for trader identity management: If you suspect inaccurate information, you should request clarification from the trader. If they don't respond, you must block them. Blocked traders have the right to appeal following your internal complaint-handling systems.

Trader data – implement a process for trader identity management: Trader data should be retained for up to 6 months after the contractual relationship with the trader ends (based upon your terms). After that the trader data must be deleted. You should share trader data with third parties only as required by law, e.g. in case of authority access requests.

Information requirements – start or update the trader info page: You should configure your platform to help traders meet their pre-contractual information obligations, including publishing address and Chamber of Commerce information. Traders should also (be able to) provide essential product identification information, including a brand/logo, and other mandatory information such as on ingredients. You should make a best effort to ensure the accuracy of information before displaying it on the platform. Afterwards, conduct random checks.

Illegal products/services – start or update a notification process: If a product/service offered is illegal, you must inform consumers who have purchased it in the past 6 months. If consumer identities are unknown, make this information public.
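The notification step for illegal products can be sketched as a lookup over purchase records: collect the contact details of consumers who bought the product in the past six months, and flag whether a public notice is also needed because some buyers cannot be reached. The record keys, the function names and the inclusive cut-off date are assumptions made for illustration.

```python
import calendar
from datetime import date


def months_before(d: date, months: int) -> date:
    """Return the date `months` earlier, clamped to the end of shorter months."""
    month_index = d.month - 1 - months
    year = d.year + month_index // 12   # floor division handles year rollover
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def buyers_to_notify(purchases, product_id, today, window_months=6):
    """Return (contacts, public_notice_needed) for one illegal product.

    Each purchase is a dict with the (hypothetical) keys 'product_id',
    'purchased_on' (a date) and 'buyer_contact' (a string or None).
    """
    cutoff = months_before(today, window_months)
    recent = [
        p for p in purchases
        if p["product_id"] == product_id and p["purchased_on"] >= cutoff
    ]
    contacts = sorted({p["buyer_contact"] for p in recent if p["buyer_contact"]})
    # If any recent buyer cannot be contacted, the information goes public.
    public_notice_needed = any(not p["buyer_contact"] for p in recent)
    return contacts, public_notice_needed
```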

And now what?

As you can read, working towards DSA compliance is not solely a legal job. Depending on where you are on the DSA ladder, it may require involvement of a number of teams, such as customer care, moderators, IT and marketing. These teams need to understand that they are accountable for transparent and fair business practices. Making people aware and responsible will help with that. You can think of drafting knowledge materials such as FAQs, implementing a practical policy and operating an e-learning program for training relevant teams. This should help you to ensure ongoing compliance and effective team collaboration.

In addition, you may need to adopt adequate record keeping procedures to ensure compliance with reporting obligations.

Our team has a lot of experience and can help you do this. We also offer several legal solutions that we can tailor to your needs. Want to know more or have any questions? Feel free to get in touch. We hope to see you at our breakfast meeting on 29 February!